Abstract

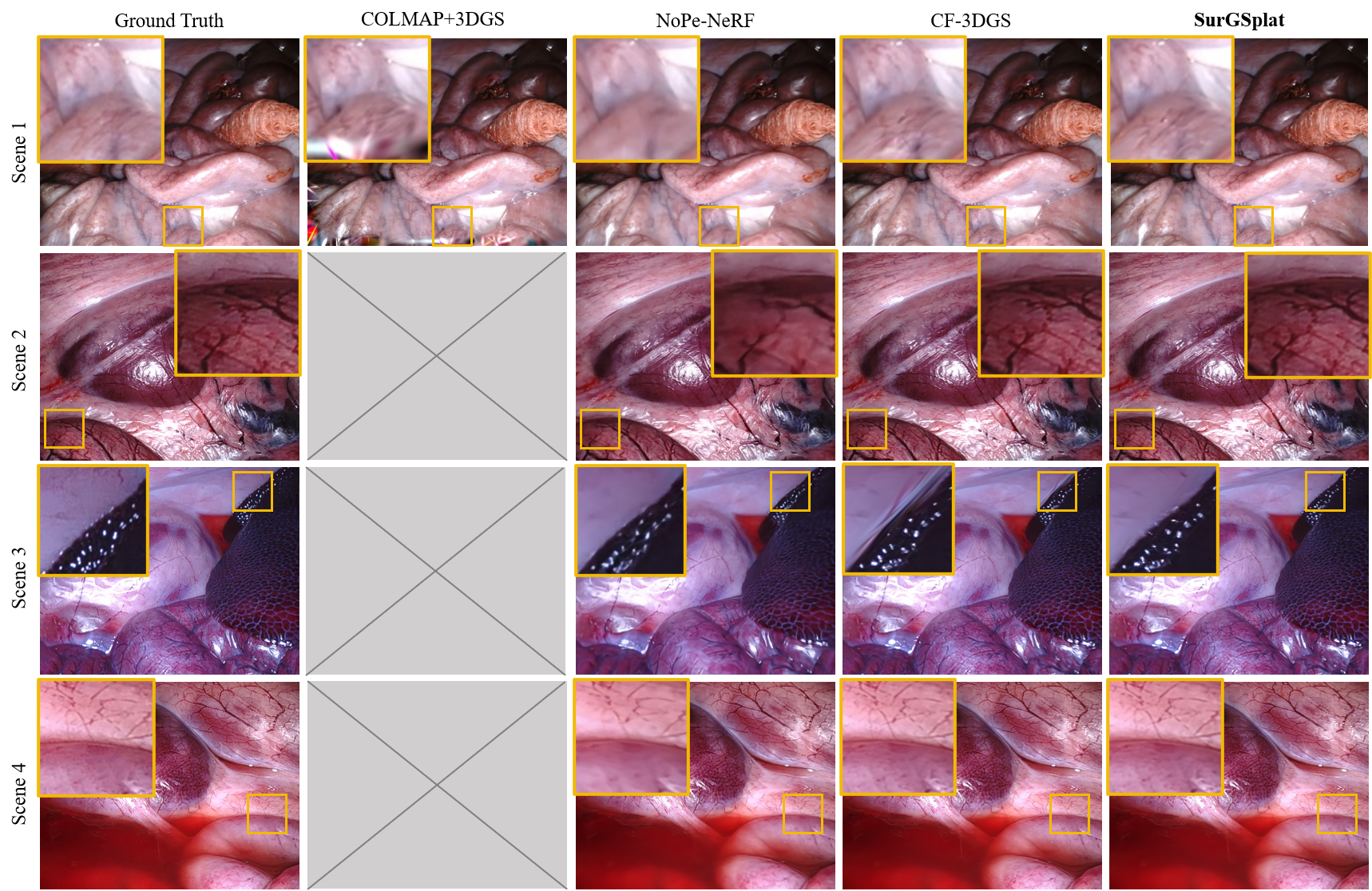

Intraoperative navigation relies on precise 3D reconstruction to ensure accuracy and safety during surgical procedures. Endoscopic scenes, however, pose particular challenges: features are sparse and lighting is inconsistent, so many existing Structure-from-Motion (SfM)-based methods are inadequate and prone to reconstruction failure. To address these challenges, we propose SurGSplat, a novel paradigm that progressively refines 3D Gaussian Splatting (3DGS) by integrating geometric constraints. By enabling detailed reconstruction of vascular structures and other critical features, SurGSplat gives surgeons enhanced visual clarity and supports precise intraoperative decision-making. Experimental evaluations demonstrate that SurGSplat achieves superior performance in both novel view synthesis (NVS) and pose estimation accuracy, establishing it as a high-fidelity and efficient solution for surgical scene reconstruction.

Method

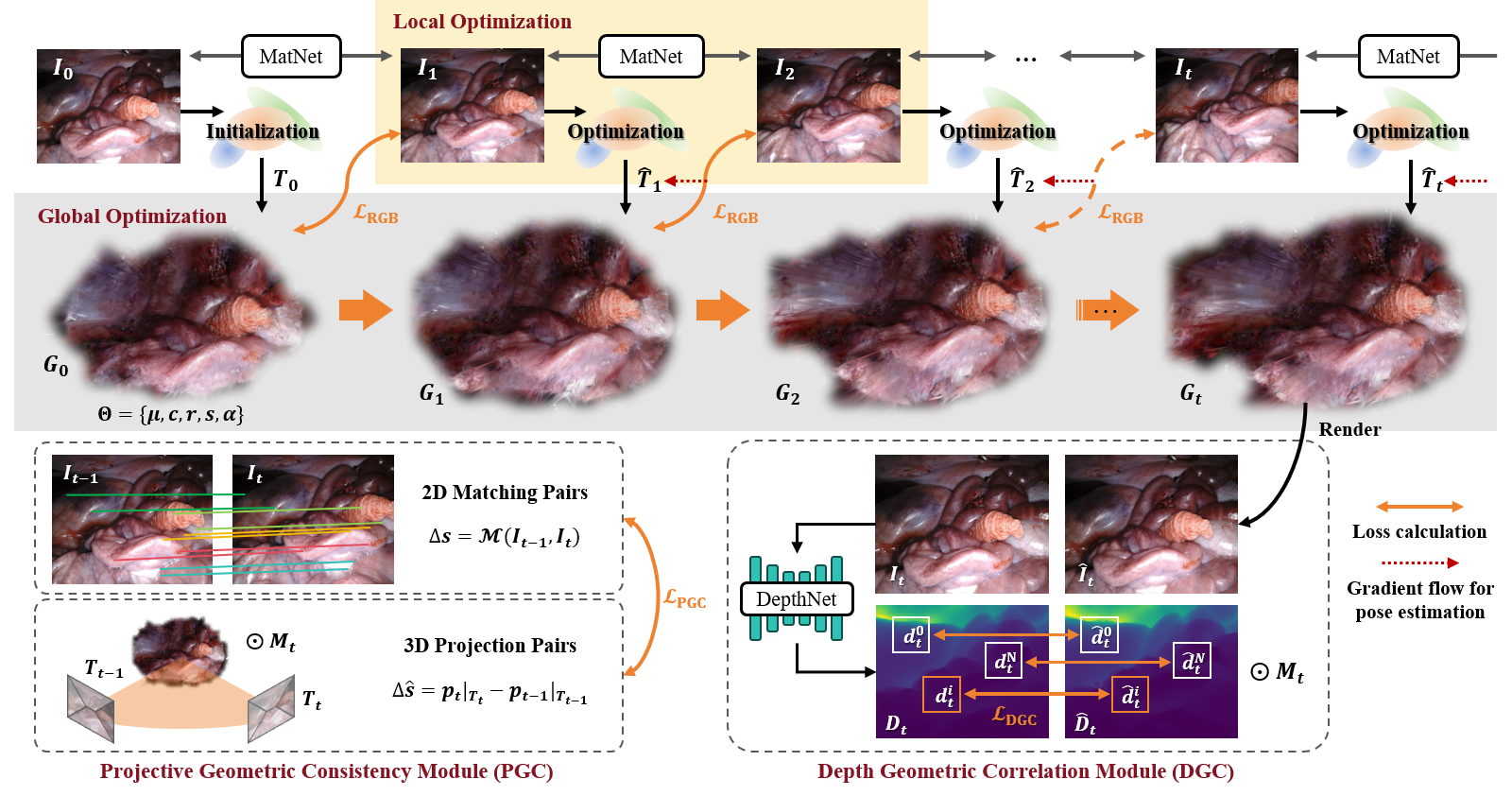

Pipeline of SurGSplat for high-fidelity surgical scene reconstruction. Starting from an initial depth map generated from a single image $I_0$, our method iteratively optimizes the camera poses and a global 3D Gaussian representation. For each frame, it constructs a local 3D Gaussian set and optimizes the relative pose via neural rendering. The accumulated poses are then used to refine the global 3D Gaussians using geometric consistency losses.
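
Two geometric steps in this pipeline can be illustrated with a minimal NumPy sketch: lifting a depth map to 3D points (a common way to seed Gaussian centers) and chaining per-frame relative poses into global poses. This is not the SurGSplat implementation. The function names `backproject_depth` and `accumulate_poses`, the pinhole intrinsics matrix `K`, and the convention that each relative pose maps frame k coordinates into frame k-1 coordinates are all assumptions made for illustration. The rendering-based pose optimization and the geometric consistency losses are not shown.

```python
import numpy as np


def backproject_depth(depth, K):
    """Lift an (H, W) depth map to an (H*W, 3) point cloud in the camera frame.

    Assumes a pinhole camera with 3x3 intrinsics K and depth measured along
    the optical (z) axis. Points are returned in row-major pixel order.
    """
    h, w = depth.shape
    # u indexes columns (x), v indexes rows (y).
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    pix = np.stack([u, v, np.ones_like(u)], axis=-1).reshape(-1, 3)
    pix = pix.astype(np.float64)
    # Each row becomes K^{-1} [u, v, 1]^T, a ray with unit z component.
    rays = pix @ np.linalg.inv(K).T
    return rays * depth.reshape(-1, 1)


def accumulate_poses(relative_poses):
    """Chain 4x4 relative transforms into global camera-to-world poses.

    Assumed convention: relative_poses[k-1] maps frame-k coordinates into
    frame-(k-1) coordinates, and frame 0 defines the world frame.
    """
    poses = [np.eye(4)]
    for T_rel in relative_poses:
        poses.append(poses[-1] @ T_rel)
    return poses


# Toy example: a 2x2 depth map at constant depth 2 and two unit steps along x.
K = np.array([[2.0, 0.0, 1.0],
              [0.0, 2.0, 1.0],
              [0.0, 0.0, 1.0]])
points = backproject_depth(np.full((2, 2), 2.0), K)

step = np.eye(4)
step[0, 3] = 1.0
global_poses = accumulate_poses([step, step])
```

In a 3DGS setting, points such as `points` would typically serve as initial Gaussian means for the first frame, and each entry of `global_poses` would place a later frame's local Gaussians in the shared world frame before global refinement.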